The Future is Fun to Predict...

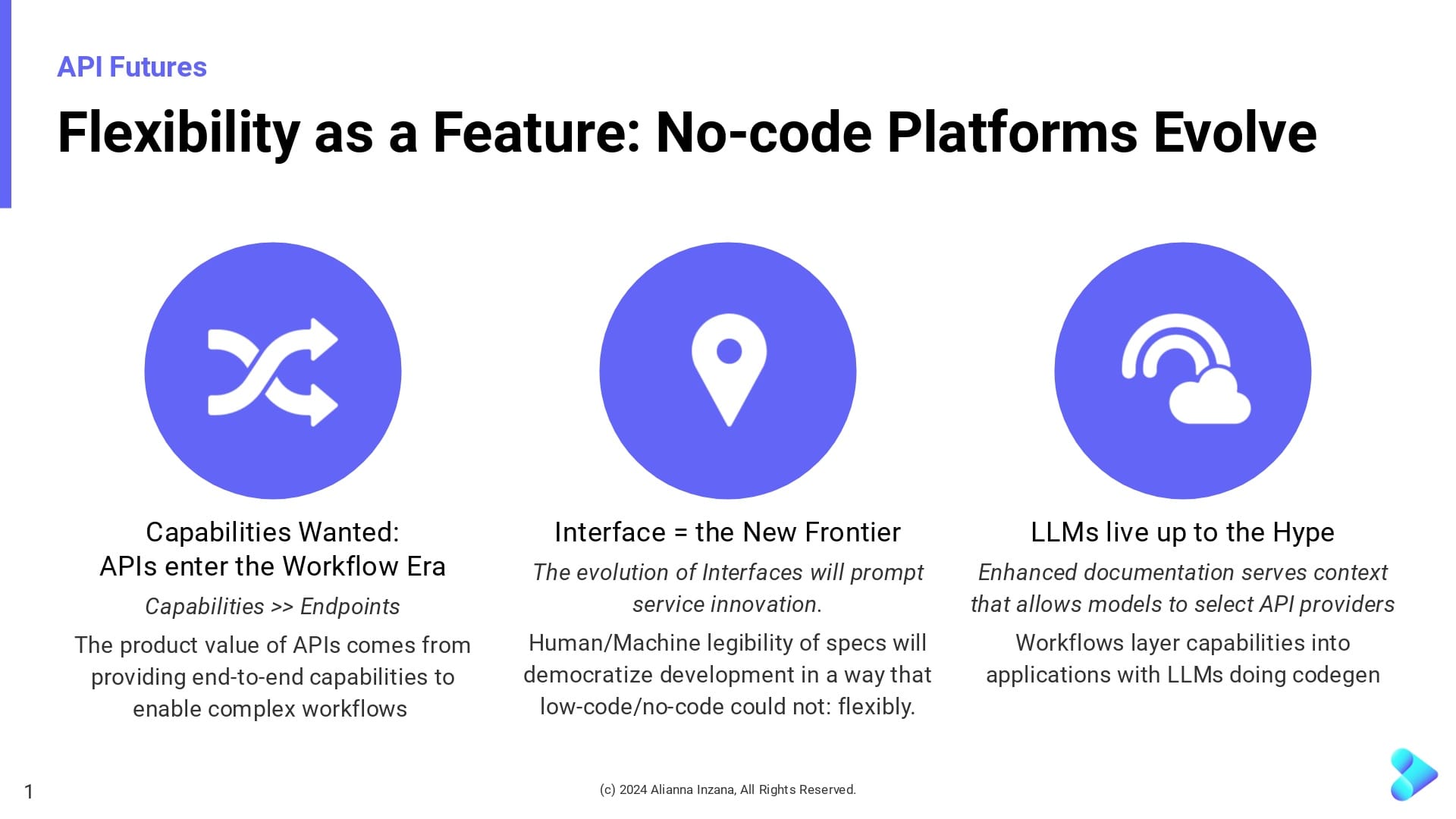

The future is fun to predict, especially when not much is riding on the outcome and the possibilities are vast. In a start-of-year blog post the stakes may seem low, but perhaps a few well-chosen words can speak to the elements of the API future that we, as its builders, want to inhabit. I could have chosen one of the more certain aspects of API Futures, but it may be more interesting to go with a moonshot: APIs will democratize development via no-code platforms.

The Promise of No-Code

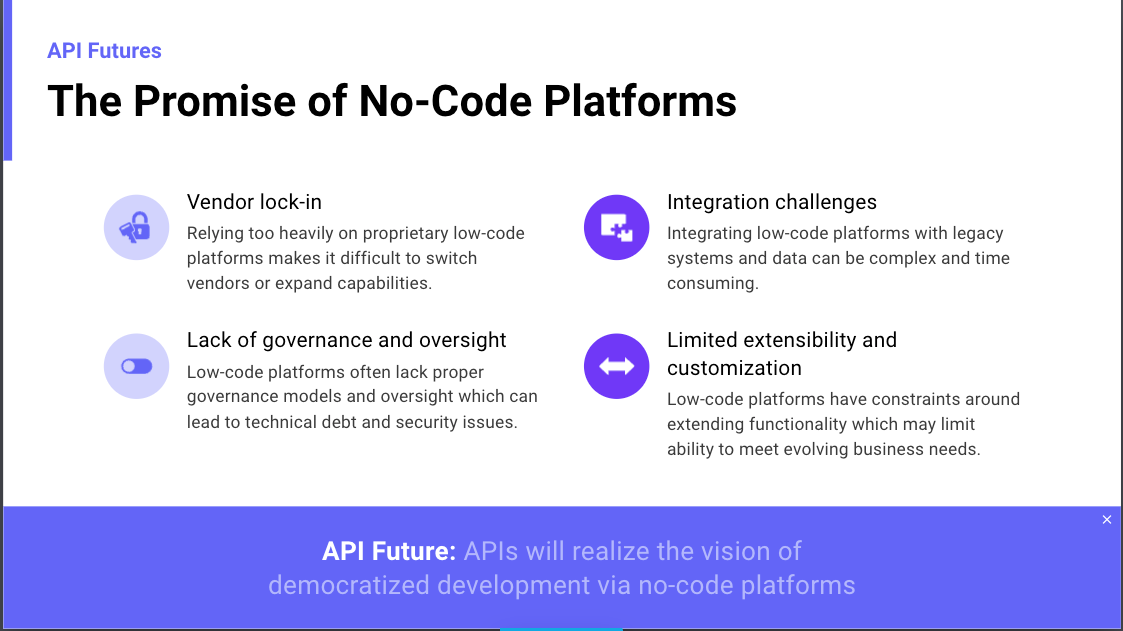

No-code platforms promised to democratize app development, and to some extent they did. What these platforms could not do was give their users flexibility and choice. The bane of the low-code/no-code platform is extensibility (or rather, the lack of it). Integrating with legacy systems remains a challenge, which is at best inconvenient and at worst a data-leakage or security issue. Vendor lock-in is still considerable, and most general-purpose platforms struggle to offer their customers real options for customization. This may be why some of the most successful low-code/no-code solutions focus on specific business domains: a narrow focus lets those platforms’ developers prioritize the integrations and capabilities that similar customers request.

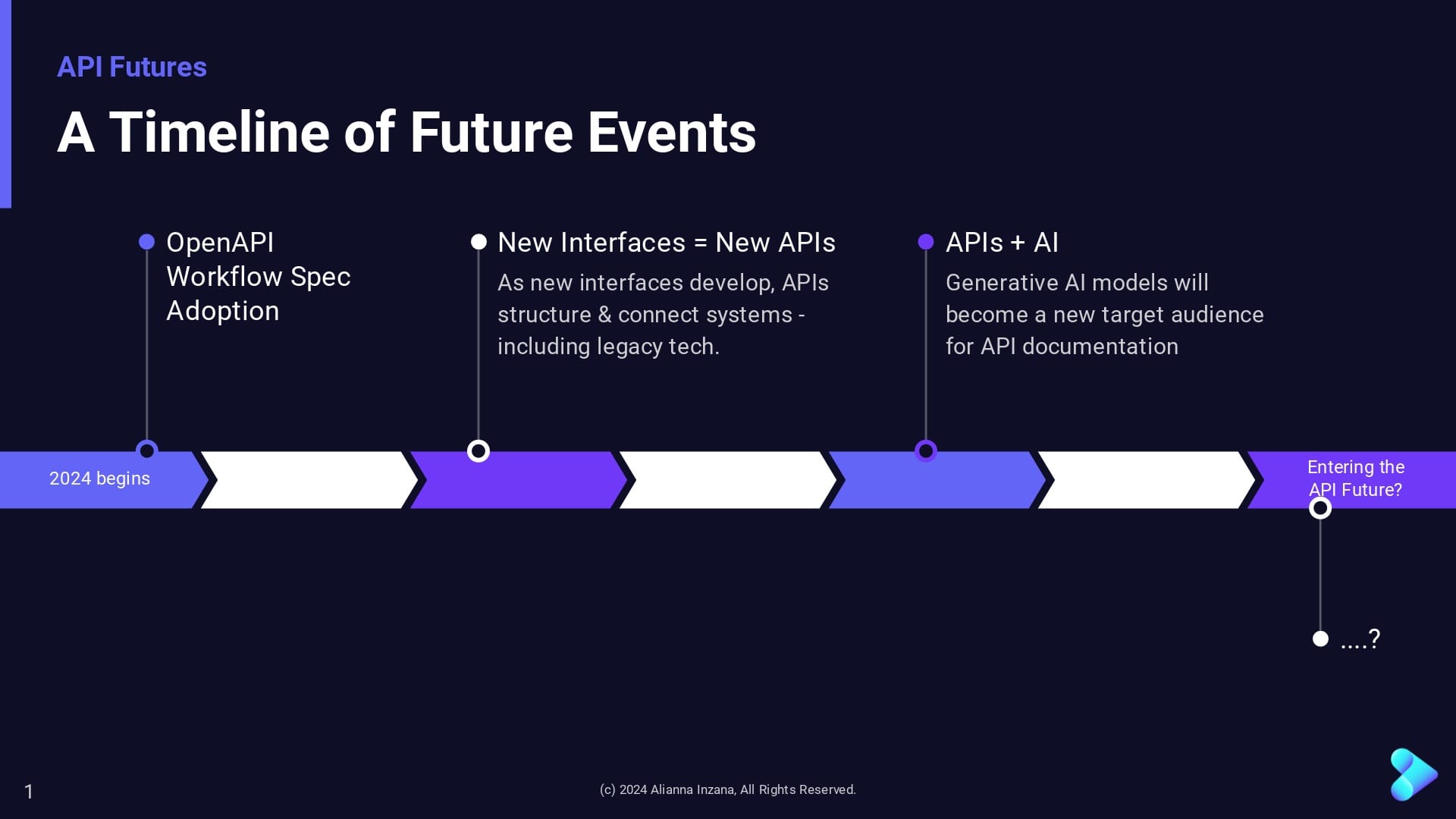

In 2024, we are still quite far from a future where ‘Citizen Developers’ build anything they can imagine without writing a line of code. As the year unfolds, watch for signposts on a timeline of API future events. They will pave the way for API-based solutions to the shortcomings of low-code/no-code platforms, combining beautifully designed APIs with Large Language Models (LLMs) trained on enhanced documentation.

Capabilities Wanted: APIs enter the workflow era

APIs are so ubiquitous that most engineers find it difficult to imagine building without them. In many organizations, they begin as a “How”, as in “How do I get these applications to talk to one another?” or “How can our business scale?” As the business value of a specific API is shared with other teams, we begin to document, version, and manage those services.

Specifications (such as the OpenAPI Specification, or OAS, formerly known as Swagger) gave the industry its first taste of standardization in a format that was both machine- and human-readable (though I joke that it is only for a certain value of human). Machine readability lent itself to tooling, and it may have over-corrected us into focusing on ‘building the API right’. This is a noble goal, but it isn’t nearly as important as ‘building the right API’.
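To make “machine-readable” concrete, here is a minimal sketch of the kind of tooling a specification enables: a few lines of Python that walk an OpenAPI-shaped document and list its operations. The API, its paths, and its operationIds are hypothetical, and the document is a trimmed-down dict rather than a complete, valid OAS file.

```python
# A tiny OpenAPI-shaped document, written as a Python dict for illustration.
# The paths and operationIds are hypothetical; only the overall layout
# (openapi / info / paths / <method> / operationId / summary) follows the OAS shape.
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}

spec = {
    "openapi": "3.0.3",
    "info": {"title": "Messaging API (hypothetical)", "version": "1.0.0"},
    "paths": {
        "/messages": {
            "post": {"operationId": "sendMessage", "summary": "Send an SMS message"},
            "get": {"operationId": "listMessages", "summary": "List sent messages"},
        },
        "/contacts": {
            "get": {"operationId": "listContacts", "summary": "List contact numbers"},
        },
    },
}


def list_operations(document: dict) -> list[tuple[str, str, str]]:
    """Return (METHOD, path, operationId) for every operation in an OAS-like document."""
    operations = []
    for path, path_item in document.get("paths", {}).items():
        for method, operation in path_item.items():
            # Path items may also hold non-operation keys (e.g. "parameters"), so skip those.
            if method.lower() in HTTP_METHODS:
                operations.append((method.upper(), path, operation.get("operationId", "")))
    return operations


for method, path, op_id in list_operations(spec):
    print(f"{method:6} {path:12} {op_id}")
```

Notice that what the tooling surfaces is a list of endpoints, which is exactly the ‘building the API right’ view.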

Engineers don’t integrate endpoints; they onboard capabilities. No one is looking for an endpoint that delivers a list of mobile numbers (except maybe hackers). They need the capability of sending SMS messages to their customers via a service.

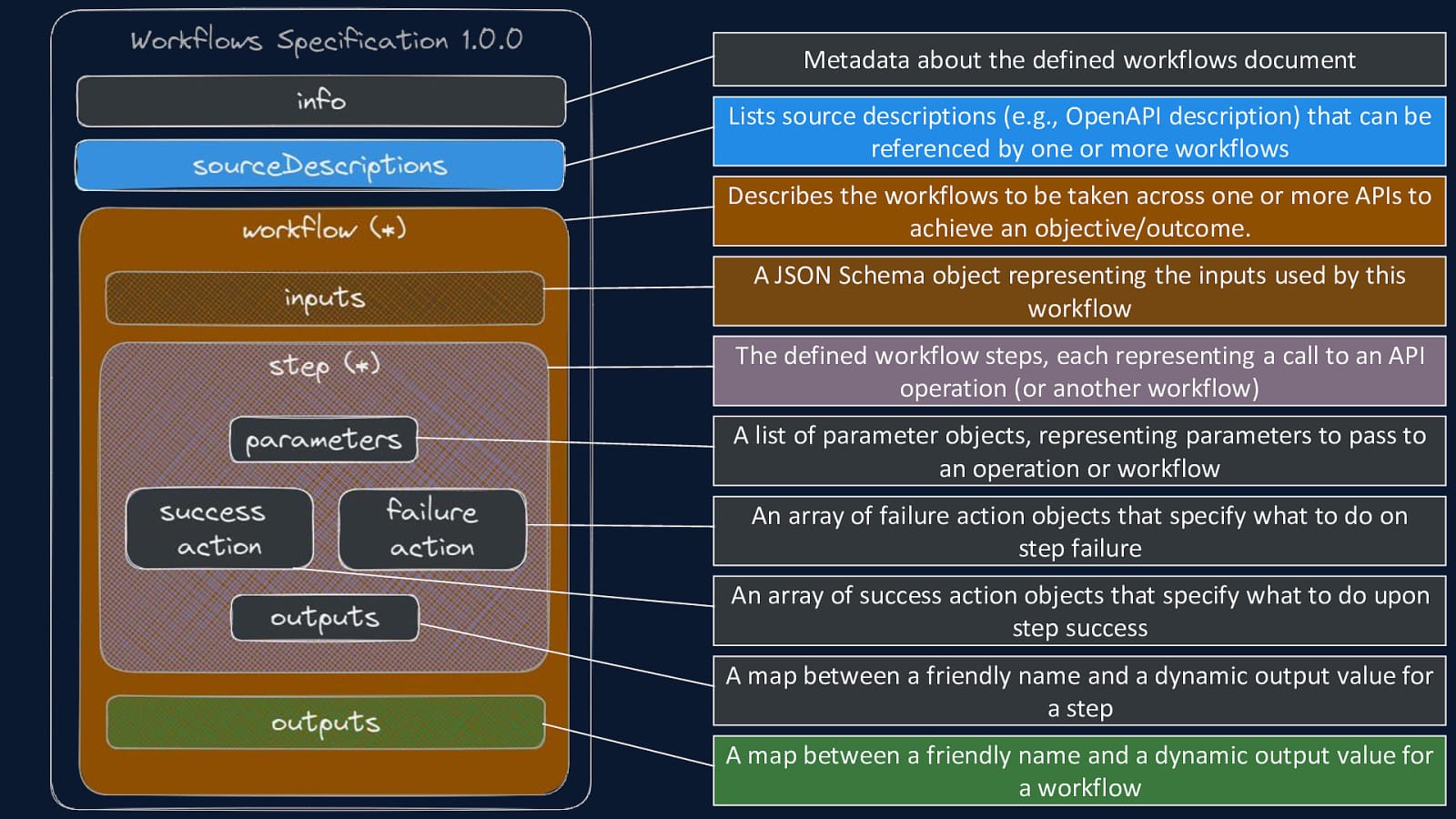

One of the forces behind the shift in conversation from endpoints to capabilities is the new workflow specification from an OpenAPI Initiative working group. The specification describes workflows, each “a specific sequence of calls to achieve a particular goal in the context of an API definition”. It provides important operational information that both machines and humans can interpret, making it far easier for consumers to engage with the capabilities on offer. Adoption of the workflow spec is the first signpost on the timeline of API Future events: the context it provides will inform LLMs of a service’s foundational capabilities and provide a means of programmatically extending existing applications, once the safety and compatibility of the service have been determined.
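The sketch below does not use the workflow specification’s actual schema or expression syntax. It is a minimal Python illustration, under simplifying assumptions, of the core idea in the quoted definition: a named sequence of calls toward one goal, where later steps consume the outputs of earlier ones. The operations, step names, and the `$inputs`/`$steps` reference syntax are all hypothetical.

```python
from typing import Any, Callable


# Hypothetical operations standing in for real API calls (no network access).
def lookup_customer(email: str) -> dict:
    return {"customerId": "c-42", "email": email, "mobile": "+15550100"}


def send_sms(to: str, body: str) -> dict:
    return {"messageId": "m-1", "status": "queued", "to": to}


OPERATIONS: dict[str, Callable[..., dict]] = {
    "lookupCustomer": lookup_customer,
    "sendSms": send_sms,
}

# A simplified workflow description, loosely modeled on the idea of a workflow spec:
# an ordered list of steps, each naming an operation and its parameters.
# The "$inputs.x" / "$steps.<stepId>.<field>" references are made up for this sketch.
notify_customer = {
    "workflowId": "notifyCustomerBySms",
    "summary": "Look up a customer's mobile number, then send them an SMS",
    "steps": [
        {"stepId": "findCustomer", "operationId": "lookupCustomer",
         "parameters": {"email": "$inputs.email"}},
        {"stepId": "sendText", "operationId": "sendSms",
         "parameters": {"to": "$steps.findCustomer.mobile", "body": "$inputs.message"}},
    ],
}


def resolve(value: Any, inputs: dict, results: dict) -> Any:
    """Replace a reference string with the workflow input or earlier step output it names."""
    if isinstance(value, str) and value.startswith("$inputs."):
        return inputs[value.removeprefix("$inputs.")]
    if isinstance(value, str) and value.startswith("$steps."):
        step_id, field = value.removeprefix("$steps.").split(".", 1)
        return results[step_id][field]
    return value  # literal values pass through unchanged


def run_workflow(workflow: dict, inputs: dict) -> dict:
    """Execute each step in order, feeding earlier results into later parameters."""
    results: dict[str, dict] = {}
    for step in workflow["steps"]:
        args = {name: resolve(v, inputs, results) for name, v in step["parameters"].items()}
        results[step["stepId"]] = OPERATIONS[step["operationId"]](**args)
    return results


outcome = run_workflow(notify_customer, {"email": "ada@example.com", "message": "Your order shipped"})
print(outcome["sendText"])
```

The consumer asks for a goal (“notify this customer by SMS”) and the workflow supplies the ordering and data flow between endpoints, which is the capability-level view described above.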

Interface: the Next Frontier

The next event on our timeline is a recurring API theme: new interfaces will increase our reliance on APIs. On the web, GUI-based forms give users guidance and input validation that newer interfaces, like voice, lack. Voice interfaces rely on APIs to interpret and structure data, then to return responses from a multitude of connected external services for weather, news, music, or even shopping (a use case that has seen only moderate growth: a 2021 study suggested that fewer than 12% of Alexa owners bought something via the interface).
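A minimal, hypothetical sketch of that gap: where a web form would make the user pick a city from a list, a voice interface receives free text, so something has to extract and validate the fields before a weather service can be called. Here a few hard-coded rules stand in for that interpretation step; a real assistant would use speech and language-understanding services, and the city list and field names are assumptions.

```python
import re
from typing import Optional

# Hypothetical set of cities a weather service accepts (a form would show these as a dropdown).
KNOWN_CITIES = ("oslo", "paris", "tokyo")
DAYS = {"today": 0, "tomorrow": 1}


def structure_weather_request(utterance: str) -> dict:
    """Turn a free-form spoken request into the fields a web form would have collected.

    Returns either a structured request or a follow-up question, because a voice
    interface cannot show a dropdown or a required-field warning.
    """
    text = utterance.lower()
    city: Optional[str] = next((c for c in KNOWN_CITIES if re.search(rf"\b{c}\b", text)), None)
    day = next((offset for word, offset in DAYS.items() if word in text), 0)  # default: today
    if city is None:
        return {"status": "needs_clarification", "prompt": "Which city do you mean?"}
    return {"status": "ok", "request": {"city": city.title(), "day_offset": day}}


print(structure_weather_request("What's the weather in Oslo tomorrow?"))
```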

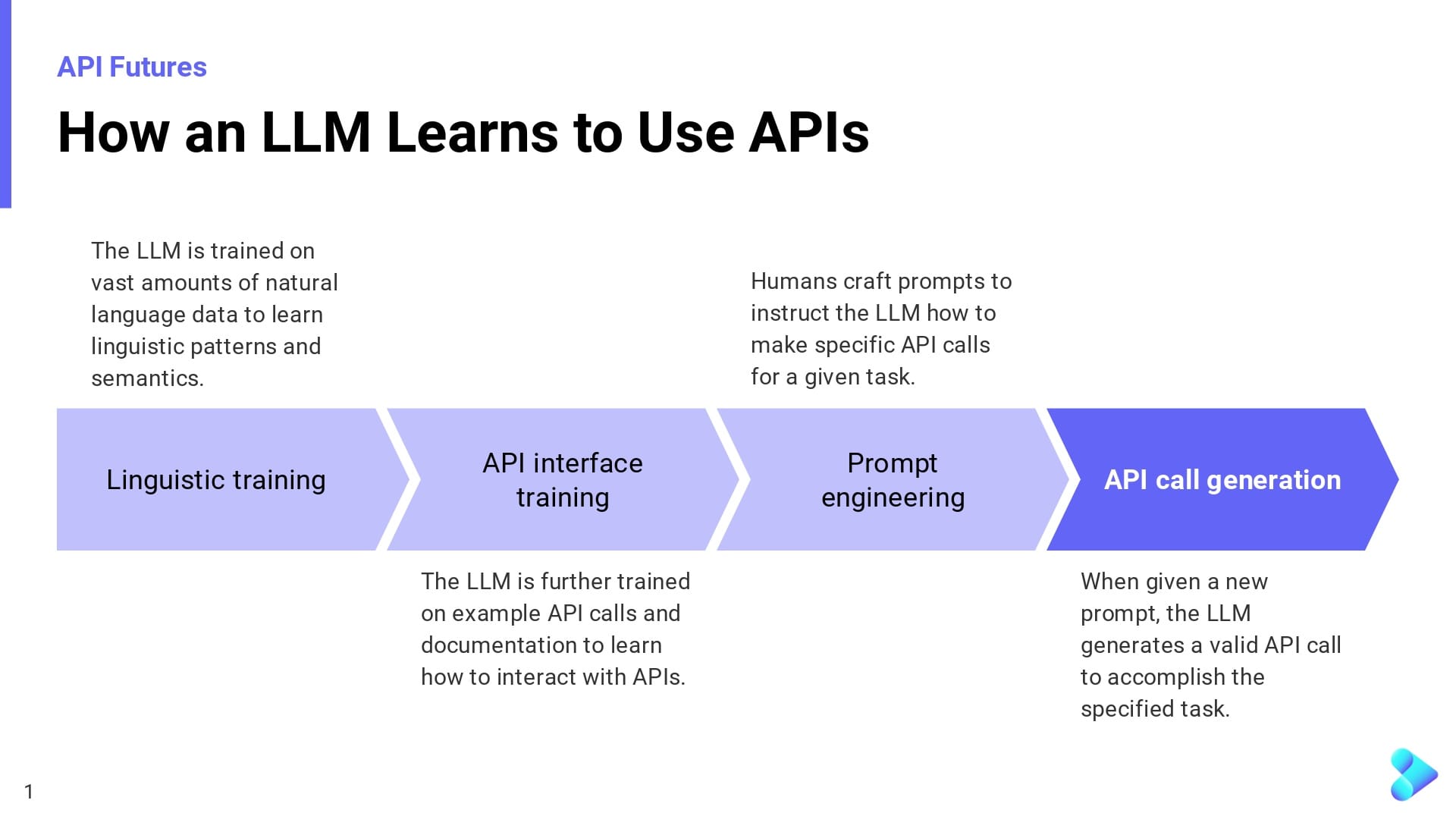

Large Language Models will augment voice-based interfaces by allowing for more than simple call-and-response. Using OpenAI’s function calling, an LLM can work out which API to call, and with what arguments, from a user prompt. The penultimate entry on our timeline of future events will be when companies begin to treat LLMs as consumers of ‘enhanced’ API documentation.
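Function calling works roughly like this: the application describes each available function (a name, a description, and a JSON Schema for its parameters), the model replies with the function it wants called plus JSON-encoded arguments, and the application runs the call and hands the result back. The Python sketch below covers only the application side. The tool description follows the general shape of OpenAI’s tool definitions, but the function, its fields, and the “model response” are hypothetical, and no model is actually called.

```python
import json

# A tool description in the JSON Schema style used by OpenAI function calling.
# The function name and its parameters are hypothetical.
weather_tool = {
    "type": "function",
    "function": {
        "name": "get_forecast",
        "description": "Get tomorrow's weather forecast for a city.",
        "parameters": {
            "type": "object",
            "properties": {
                "city": {"type": "string", "description": "City name, e.g. Oslo"},
                "units": {"type": "string", "enum": ["metric", "imperial"]},
            },
            "required": ["city"],
        },
    },
}


def get_forecast(city: str, units: str = "metric") -> dict:
    # Stand-in for a real weather API call.
    return {"city": city, "units": units, "summary": "light rain"}


LOCAL_FUNCTIONS = {"get_forecast": get_forecast}

# Hand-written stand-in for the tool call a model might return for the prompt
# "Will I need an umbrella in Oslo tomorrow?". No model is called in this sketch.
model_tool_call = {
    "id": "call_1",
    "type": "function",
    "function": {"name": "get_forecast", "arguments": '{"city": "Oslo"}'},
}


def dispatch(tool_call: dict) -> dict:
    """Run the local function the model asked for, using its JSON-encoded arguments."""
    name = tool_call["function"]["name"]
    args = json.loads(tool_call["function"]["arguments"])
    return LOCAL_FUNCTIONS[name](**args)


print(dispatch(model_tool_call))
```

The names and `description` strings are much of what the model has to go on when choosing a function, which is why the quality of API documentation matters in this loop.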

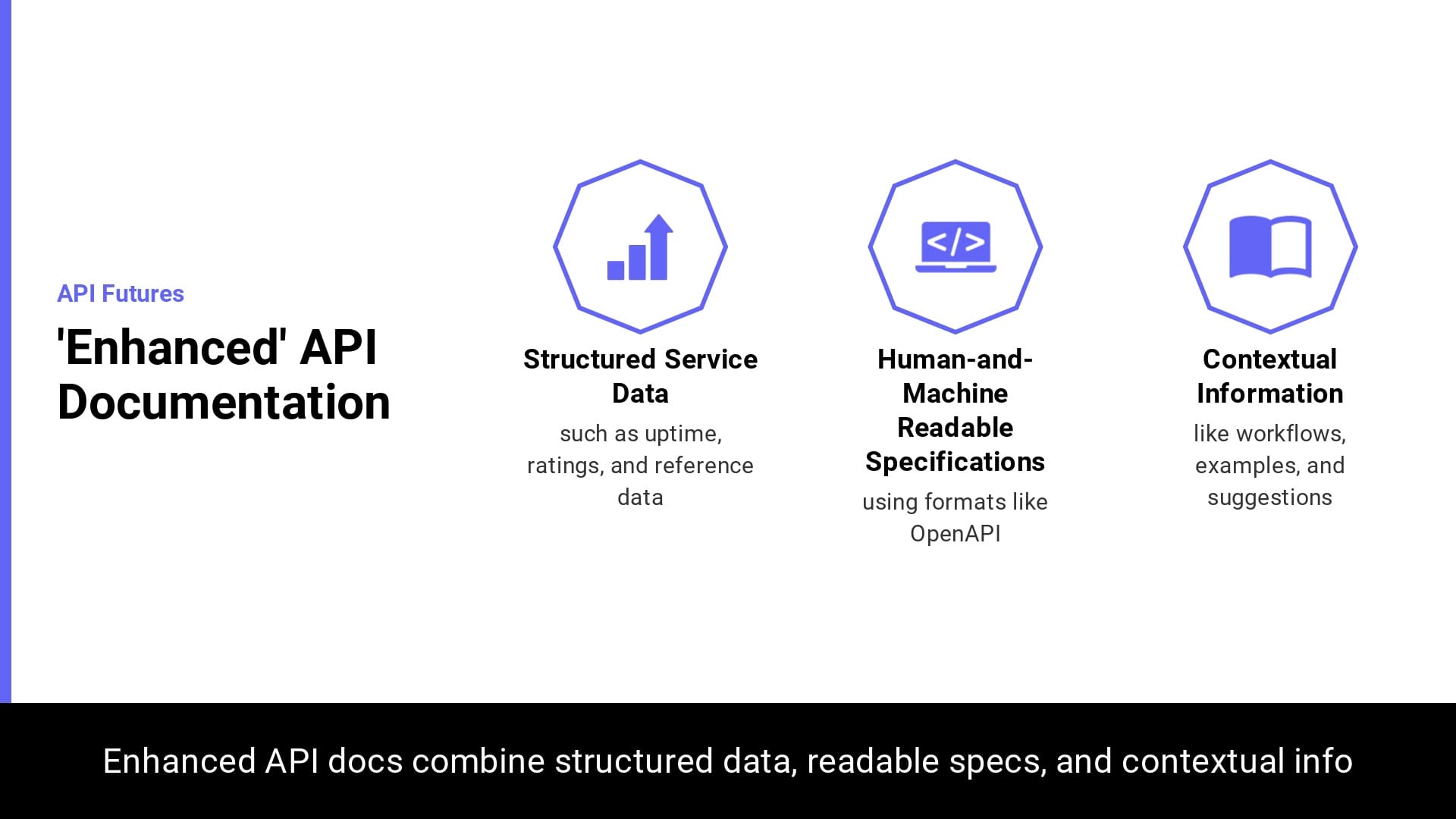

API product companies like Stripe and Twilio have benefited significantly from treating documentation as a feature, not an afterthought. Enhanced API documentation unifies:

- Structured service data (such as uptime, ratings, and reference info),

- Human- and machine-readable specification formats, and

- Unstructured contextual information (examples, narrative text, suggestions for when and how to use the API, the problems it was intended to solve, etc.).
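One way to picture these three layers living together is a single record per API that can be flattened into text for an LLM’s context window or a retrieval index. This is a minimal sketch; the `EnhancedDoc` class, its field names, and the example values are hypothetical and simply mirror the three kinds of information in the list.

```python
from dataclasses import dataclass, field


@dataclass
class EnhancedDoc:
    """A hypothetical 'enhanced documentation' record for one API."""

    # Structured service data
    name: str
    uptime_pct: float
    rating: float
    # Pointer to a human- and machine-readable specification (e.g. an OpenAPI file)
    spec_url: str
    # Unstructured contextual information
    problems_solved: list[str] = field(default_factory=list)
    when_to_use: str = ""
    examples: list[str] = field(default_factory=list)

    def to_context(self) -> str:
        """Flatten the record into plain text that could be handed to an LLM as context."""
        lines = [
            f"API: {self.name}",
            f"Uptime: {self.uptime_pct:.2f}% | Rating: {self.rating:.1f}/5",
            f"Specification: {self.spec_url}",
            f"When to use: {self.when_to_use}",
        ]
        lines += [f"Solves: {p}" for p in self.problems_solved]
        lines += [f"Example: {e}" for e in self.examples]
        return "\n".join(lines)


sms_doc = EnhancedDoc(
    name="ExampleSMS (hypothetical)",
    uptime_pct=99.95,
    rating=4.6,
    spec_url="https://example.com/openapi.json",
    problems_solved=["Send transactional SMS to customers"],
    when_to_use="Short, time-sensitive notifications such as delivery updates.",
    examples=["POST /messages with a 'to' number and a 'body'"],
)
print(sms_doc.to_context())
```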

Moving Past the 'Pinnacle'

The unattenuated hype around LLMs in 2023 quickly led us to the “Pinnacle of Inflated Expectations” for the technology. As anyone who has been asked to help train LinkedIn’s model through ‘collaborative article’ contributions knows, generative AI has a distinctive, if unoriginal, voice. It is safe to say that ChatGPT won’t be writing our term papers or drafting legal opinions any time soon, especially without human review. But within these limitations, we can see what these models are uniquely suited to do: synthesize large amounts of information and recognize patterns within it.

Some initial work on bringing together multiple capabilities to build applications has happened with LangChain, which pre-packages Components (tools for working with LLMs) and off-the-shelf chains (for higher-level tasks). LangChain is designed to help build applications that are context-aware and then connect them to the relevant APIs. In the API space, we could train an LLM on the metadata and descriptions provided by enhanced documentation. The model might then be able to infer how to make a context-aware choice between several providers of a particular capability, or generate code that connects and deploys layered capabilities from multiple providers of well-documented services.
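The “context-aware choice between several providers” can be pictured with a deliberately simple, rule-based sketch: filter providers of a capability by the caller’s constraints using the structured metadata that enhanced documentation would expose, then rank the survivors. In the scenario described above an LLM would make this judgment from documentation and the prompt; here a hand-written rule stands in for that inference, no LangChain APIs are used, and the providers, prices, and fields are invented.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Provider:
    name: str
    capability: str
    uptime_pct: float
    price_per_msg: float  # hypothetical price in USD
    regions: set[str]


# Invented providers of the same capability, with metadata enhanced docs might expose.
PROVIDERS = [
    Provider("AlphaText", "send_sms", 99.99, 0.0100, {"US", "EU"}),
    Provider("BetaSMS", "send_sms", 99.90, 0.0060, {"US"}),
    Provider("GammaMsg", "send_sms", 99.50, 0.0040, {"EU", "APAC"}),
]


def choose_provider(capability: str, region: str, max_price: float) -> Optional[Provider]:
    """Pick the most reliable provider offering the capability in a region, within budget."""
    candidates = [
        p for p in PROVIDERS
        if p.capability == capability and region in p.regions and p.price_per_msg <= max_price
    ]
    # Prefer higher uptime; break ties with the lower price. None if nothing qualifies.
    return max(candidates, key=lambda p: (p.uptime_pct, -p.price_per_msg), default=None)


best = choose_provider("send_sms", "US", max_price=0.008)
print(best.name if best else "no provider")
```

Changing the context (a different region or budget) changes the answer, which is the behavior the paragraph hopes a model could infer on its own from well-documented services.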

Flexibility as a Feature

Which brings us back to the as-yet-unfulfilled promise of no-code platforms: democratizing app development. If the future unfolds as I envision it, the pattern of new interfaces commanding new APIs will continue, and we will see the API community speak the language of workflows through their specifications. Workflow specs will provide a new surface for training interactions with LLMs, while enhanced documentation will layer in API metadata and related unstructured content from a variety of sources. Specialized LLMs will evolve to sift through numerous capability providers and, when prompted, make the best context-aware choice for the prompt engineer’s needs. Other models will learn to generate code that wires multiple capabilities together into an app, and these models will be integrated into low-code/no-code platforms, making those offerings more flexible. This is not the work of a year, or even two, but the seeds of the technology to build it are already here. In that API Future, the Citizen Developer learns by asking better questions, and the community of builders expands until anyone can build an entire application without writing a single line of code.