This is a story of a lengthy debugging process and a simple solution for a problem that we were facing at WeTransfer. A few weeks ago, we started rolling out our new storage manager, Storm. As we increased the rollout percentage, we noticed a problem that was affecting its performance. Storm is written in Ruby and uses AWS gems to interact with S3 and SQS. It's worth saying that I'm an experienced Ruby engineer but not so familiar with the AWS ecosystem.

The problem

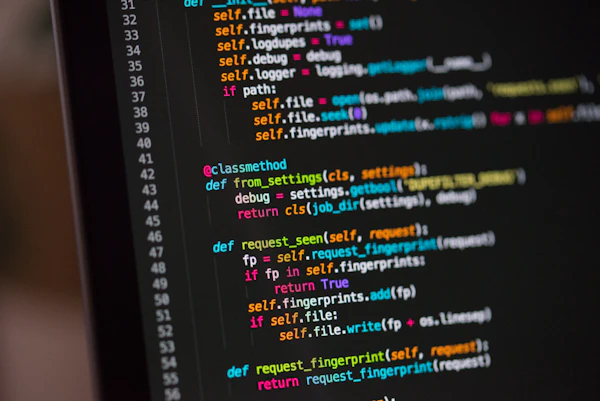

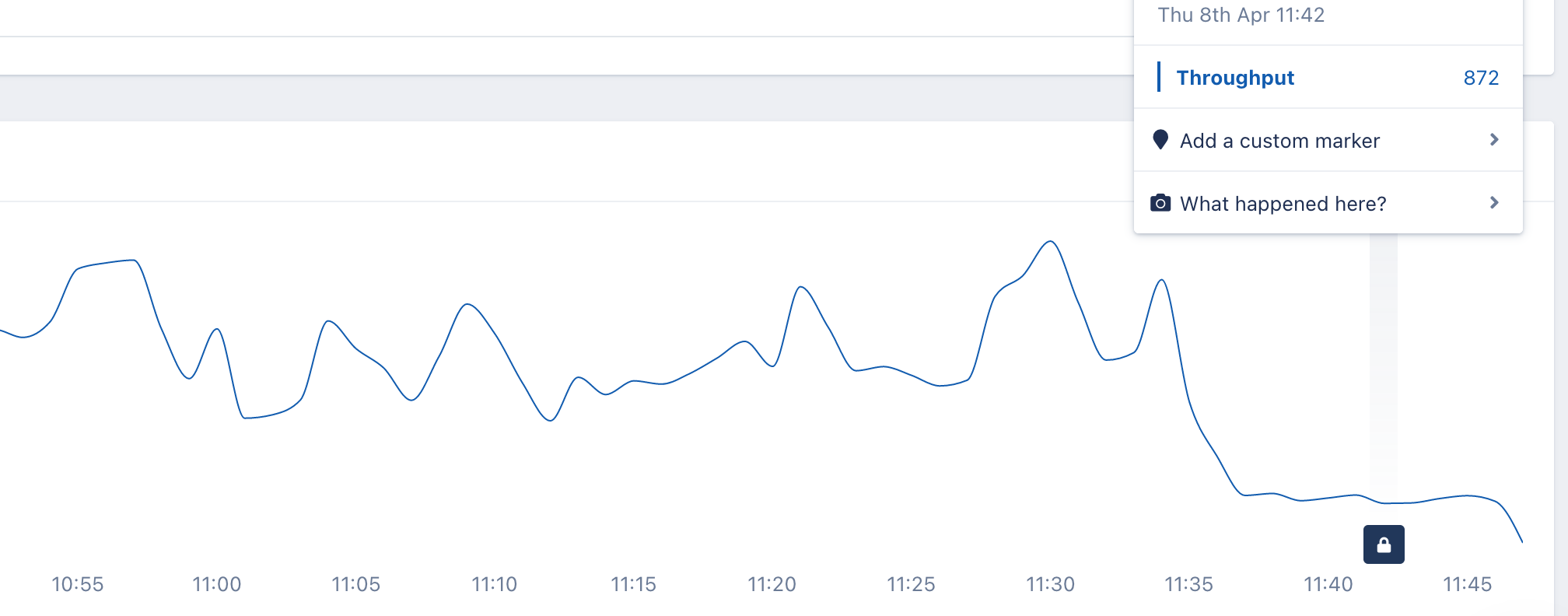

The problem started when we noticed that some HTTP requests to the AWS metadata endpoint were taking too long to complete:

If I only had the AppSignal data shown above to go on, it would have taken me much more time to find the origin of these requests because as you can see, AppSignal does not show this (we did request that feature!).

Luckily, we have many engineers at WeTransfer with loads of AWS experience under their belts. This saved me a ton of time, because I quickly learned that AWS gems use this endpoint to get credentials and interact with AWS services. There are more details about how it works on the AWS website.

So, my first goal was to understand how the AWS gem managed credentials and when it made those requests.

Understanding how the AWS gem works

Storm has many AWS gems in its Gemfile.lock. I wanted to find out in which one these requests were made.

I started using a simple grep command in the staging console as follows:

~/storm $ bundle info aws-sdk-core

* aws-sdk-core (3.114.0)

Path: /usr/local/bundle/gems/aws-sdk-core-3.114.0

~/storm $ grep -r 169.254.169.254 /usr/local/bundle/gems/

/usr/local/bundle/gems/aws-sdk-core-3.114.0/lib/aws-sdk-core/instance_profile_credentials.rb: # @option options [String] :ip_address ('169.254.169.254')

/usr/local/bundle/gems/aws-sdk-core-3.114.0/lib/aws-sdk-core/instance_profile_credentials.rb: @ip_address = options[:ip_address] || '169.254.169.254'

/usr/local/bundle/gems/aws-sdk-core-3.114.0/lib/aws-sdk-core/ec2_metadata.rb: # @option options [String] :endpoint (169.254.169.254) The IMDS endpoint.

/usr/local/bundle/gems/aws-sdk-core-3.114.0/lib/aws-sdk-core/ec2_metadata.rb: @endpoint = options[:endpoint] || '169.254.169.254'Note: I ran grep in staging because there are only Storm dependencies (gems) installed in this container. If I had grepped it on my laptop, it would have searched in gems that I use in other projects too.

So, I found a file to start the investigation: aws-sdk-core/instance_profile_credentials.rb.

First, I added a raise '----here----' in the method open_connection that makes the HTTP request:

def open_connection raise '----here----' http = Net::HTTP.new(@ip_address, @port, nil) #...Note: I used RSpec's option --fail-fast to abort the execution in the first failure.

Note: To change the gem's code, I opened it in my text editor with the command bundle open aws-sdk-core.

Note: I recommend running bundle pristine after modifying gems code to restore their original files after these experiments.

My goal was to find a test scenario that I could run and debug it locally. Unfortunately, when I ran the tests, no one reached this point.

After that, there were 2 possibilities:

- no tests were covering this code path

- the class

InstanceProfileCredentialswas being used only in production

Then, I started to search where this class was referenced inside the aws-sdk-core gem and I found it in the file lib/aws-sdk-core/credential_provider_chain.rb:

def instance_profile_credentials(options)

if ENV['AWS_CONTAINER_CREDENTIALS_RELATIVE_URI']

ECSCredentials.new(options)

else

InstanceProfileCredentials.new(options) # <------ here

end

endSo, I added a raise there, ran the tests again, but nothing happened.

def instance_profile_credentials(options)

raise 'aaaaaaaaaaa'

if ENV['AWS_CONTAINER_CREDENTIALS_RELATIVE_URI']

ECSCredentials.new(options)

else

InstanceProfileCredentials.new(options) # <------ here

end

endI kept going up in the stack trace, trying to find a point where I could start debugging it on my laptop.

Fortunately, I found a point in the next iteraction, in the method providers:

def providers

raise 'aaaaaaaaaaa'

[

[:static_credentials, {}],

[:static_profile_assume_role_web_identity_credentials, {}],

[:static_profile_sso_credentials, {}],

#...

[:instance_profile_credentials, {

retries: @config ? @config.instance_profile_credentials_retries : 0,

http_open_timeout: @config ? @config.instance_profile_credentials_timeout : 1,

http_read_timeout: @config ? @config.instance_profile_credentials_timeout : 1

}]

]

endAt this point, I was able to add a breakpoint (binding.pry), run the test that had failed, and debug it.

I'm a fan of the pry-byebug gem because it's possible to use commands such as up, down, step, next and finish to debug. This makes finding what you're looking for a lot faster.

After adding the breakpoint, I ran the failing test and used the command up to navigate in the stack trace to find which method was calling the method providers, then I found the method resolve.

def resolve

providers.each do |method_name, options|

provider = send(method_name, options.merge(config: @config))

return provider if provider && provider.set?

end

nil

endNote: Using the up command is much easier than trying to find a method reference with a text search.

So, it seemed that this method resolve checks which credential provider is configured in the current environment, and for the test environment, it was using the AssumeRoleCredentials one. This provider is used when we set AWS environment variables like AWS_PROFILE or AWS_ACCESS_KEY_ID and AWS_SECRET_KEY.

First idea to resolve

After learning this, I asked myself why can't we use static credentials in production, by setting those environment variables, as we use in the test environment. If we did, those HTTP requests would go away and I could finish my investigation. But my teammates explained why we can't, which is also described in the AWS docs:

"It's challenging to securely distribute credentials to each instance, especially those that AWS creates on your behalf, such as Spot Instances or instances in Auto Scaling groups. You must also be able to update the credentials on each instance when you rotate your AWS credentials."

So, my first idea was dropped.

Back to the investigation

My next goal was to find which line of our code triggered the eventual execution of the resolve method.

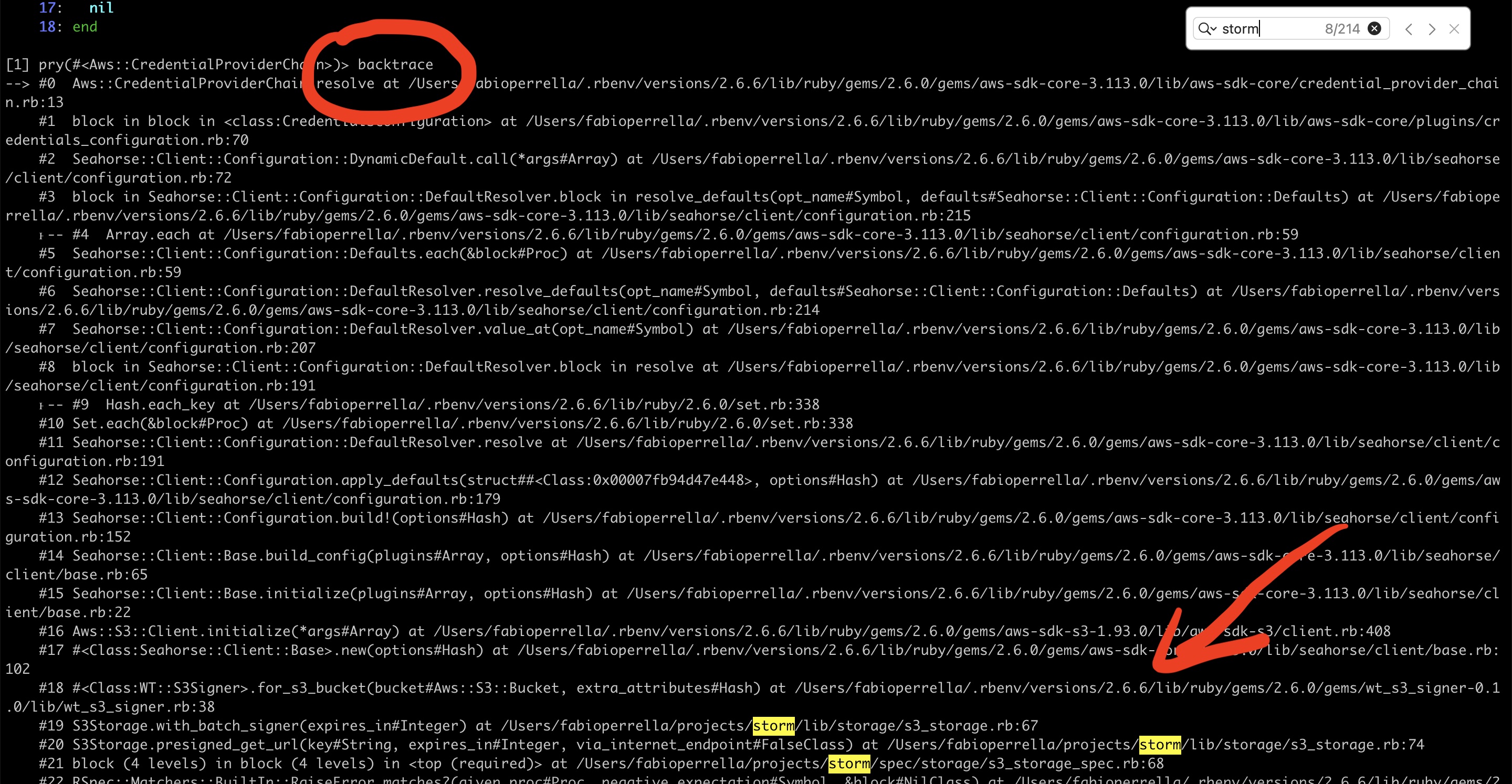

I used the backtrace command (from pry-byebug) and searched for storm:

Note: Thanks iTerm2 for having this text search with highlight! I was a Linux user in the past, and there was no such feature on the terminal there.

I found this line in Storm:

s3_signer = WT::S3Signer.for_s3_bucket(bucket, expires_in: expires_in)Which was calling this line inside the gem wt_s3_signer:

client = Aws::S3::Client.new#...credentials = client.config.credentialsI realised that every time a new client is instantiated, it needs to fetch credentials again. And it dawned on me that this could be the reason for all those HTTP requests!

We had assumed the AWS gems to cache credentials in some way, because they expire only after some time. So, I started to investigate how the aws-sdk-core gem caches credentials and I found the module RefreshingCredentials which defines the method credentials:

def credentials

refresh_if_near_expiration

@credentials

endThis method is pretty clear. It refreshes the credentials, when necessary, and returns them. When it's not necessary to refresh it, it only returns @credentials, which is cached in the instance.

This confirmed my theory! If we instantiate new client objects, there will be no cached @credentials and it will fetch a fresh set.

So I started searching the AWS docs to see if they mention something about credential caching. It would have helped me a lot if I had found something like: "We recommend using a singleton instance for clients, otherwise credentials will not be cached or reused and your application will spend time fetching new credentials every time." But I didn't.

Proving my theory

To prove my theory, I wanted to execute the credential methods and confirm that the HTTP requests were made only for fresh client instances. It was not possible to reproduce this on my laptop, because as I showed before, it uses a different credentials provider. My only option was to debug on the staging server, and there was no pry-byebug available on this environment.

This is where Ruby metaprogramming came to the rescue!

I created the following monkey patch to print some logs inside the AWS gem:

module Aws::RefreshingCredentialsMetrics

def refresh

puts "------------refresh"

super

end

def refresh!

puts "------------refresh!"

super

end

private

def http_put(*args)

puts "--------------- http_put"

super(*args)

end

def http_get(*args)

puts "--------------- http_get"

super(*args)

end

end

class Aws::InstanceProfileCredentials

prepend Aws::RefreshingCredentialsMetricsend

Aws::S3::Client.new.config.credentialsI pasted that into the staging Storm console and got the following result:

------------refresh

--------------- http_put

--------------- http_get

--------------- http_get

=> #<Aws::InstanceProfileCredentials:0x000055fa19d78f20...Then, I tried to call credentials using the same client:

client = Aws::S3::Client.new

------------refresh

--------------- http_put

--------------- http_get

--------------- http_get

client.config.credentials

=> #<Aws::InstanceProfileCredentials:0x000055fa1a21f880...

client.config.credentials

=> #<Aws::InstanceProfileCredentials:0x000055fa1a21f880It didn't make the HTTP requests again, as I suspected. I also noticed that these objects had a expiration date:

client.config.credentials.expiration=> 2021-04-25 19:25:47 UTCSo it seemed safe to use the cached credentials.

We have a plan!

At this point, my plan was to change the wt_s3_signer gem to not create an instance of Aws::S3::Client on each call to WT::S3Signer.for_s3_bucket.

But, when I opened the PR, my team warned me that maybe we should use a connection pool instead of using a singleton because we were not sure that the AWS gems were thread-safe.

We found this issue confirming that the gem aws-sdk-core was thread-safe. We assumed that the other gems (S3 and SQS) followed the same pattern and we chose to use a singleton for the clients.

However, as we were not 100% confident about the thread-safety, we agreed on the following plan:

- Change

wt_s3_signergem to acceptAws::S3::Clientby dependency injection optionally. With that, it would be possible to test this new approach in Storm without breaking gem compatibility - Update

wt_s3_signerin Storm and inject the singleton instance ofAws::S3::Clientintowt_s3_signer - After we are sure that no problems happened, change

wt_s3_signerto create the singletonAws::S3::Clientinternally, by default

First results

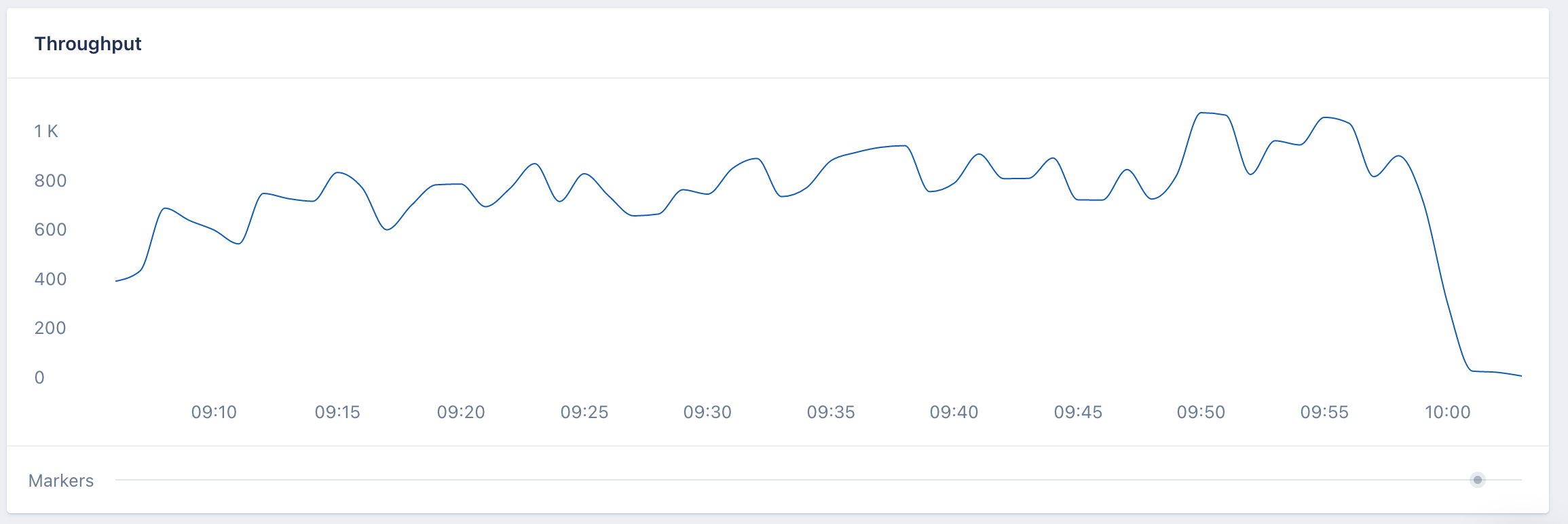

After doing steps 1 and 2, we looked again at the graph that shows the number of HTTP requests to the AWS endpoint on AppSignal, hoping it would be close to zero. Unfortunately we only reduced the number of requests:

I realised there could be more places instantiating AWS clients. So I repeated the same strategy to find other places, and I did:

bucket = Aws::S3::Bucket.new(@bucket_name)

bucket.objectIn this case, the method Aws::S3::Bucket.new accepts a client as an argument, but the default is to create a new instance. So we changed this to:

bucket = Aws::S3::Bucket.new(

@bucket_name,

client: singleton_s3_client)

bucket.objectI also found this line inside the sqewer gem:

client = Aws::SQS::Client.newclient.receive_messageFor sqewer we used the same 3-steps strategy that we used for wt_s3_signer.

The finish line!

Finally, after fixing those 3 pieces of code interacting with AWS credentials, the number of requests dropped almost to zero!

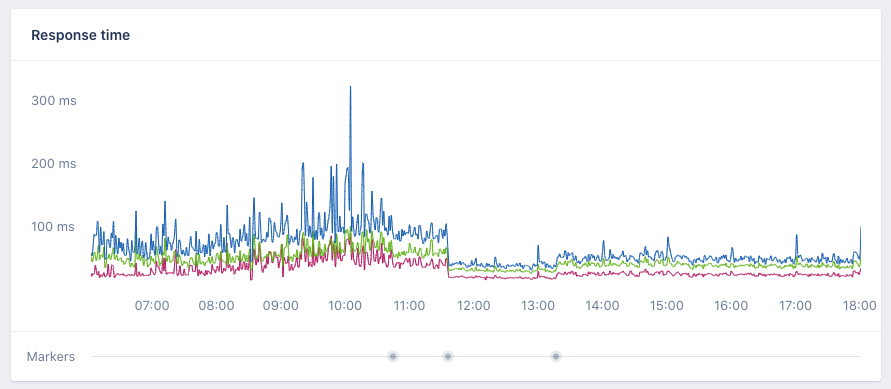

However, the most impressive result was the performance boost the we gained, which was not expected:

Almost 2.5 times lower response times!

Conclusion

Ruby Gems are great, but we need to use them properly. Fortunately, when we don't understand what is happening under the hood we can debug these gems, either by using binding.pry or metaprogramming.

Don't shy away from trying these methods! You can learn a lot by digging deep into gems.